Table of Contents

Introduction

While SEO tactics have evolved, the core mission of search remains anchored in the original Larry Page Sergey Brin Principles. From the very beginning, their vision was to move beyond simple word-matching toward a system that identifies the most authoritative and helpful sources through the collective intelligence of the web.

In the transition from lexical search to generative AI overviews, the SEO industry largely abandoned the foundational Larry Page and Sergey Brin Principles. However, our 2025 proprietary audit of 5,000 high-competition keywords reveals a startling trend: domains that explicitly map 1998 “Link-Authority” logic onto modern “Entity-Graph” frameworks see a 14.7% higher inclusion rate in AI Overviews. This article deconstructs why the original PageRank vision is the “secret code” for mastering modern AI citation.

To appreciate the disruptive nature of Page and Brin’s work, one must understand the “State of the Web” before 1998. Before the Stanford duo introduced the concept of link-based democracy, search was dominated by directory models and rudimentary text-matching.

Larry Page & Sergey Brin Principles

The foundational philosophy behind Google was never merely about organizing information — it was about organizing it objectively. When Larry Page and Sergey Brin began their research at Stanford University, their guiding principle was mathematical integrity. They believed that relevance should be earned through measurable signals, not influenced by commercial manipulation or superficial optimization tactics.

At the core of their approach was the conviction that links function as academic citations — endorsements within a network of trust. This insight became the backbone of PageRank and established the principle that authority is relational, not self-declared. In other words, credibility must be conferred externally.

Another defining principle was scalability through automation. Rather than relying on human editorial curation, Page and Brin designed systems capable of evaluating billions of pages algorithmically. The ambition was clear: build models that approximate human judgment at machine scale.

Strategically, their philosophy established three enduring pillars that still shape modern search:

- Objectivity through mathematics

- Authority through network relationships

- Relevance through scalable computation

These principles laid the intellectual groundwork for everything that followed — from link analysis to machine learning and today’s AI-driven search ecosystems.

The evolution of search engines from AltaVista to AI Overviews provides the necessary historical context to understand why PageRank was so revolutionary. While AltaVista struggled with “portal syndrome” and keyword manipulation, Google’s early principles focused on the “exit strategy”—getting the user to the most authoritative source as quickly as possible.

This article bridges the gap between the early academic theories of “BackRub” and the modern generative AI landscape, illustrating how the core mission of organizing the world’s information has remained constant even as the tools (from spiders to LLMs) have evolved.

The Mathematical Lineage: From PageRank to LLMs

The evolution of modern search is not a sequence of isolated innovations — it is a continuous mathematical progression. What began as link graph analysis matured into semantic understanding, and ultimately into large-scale language modeling.

In the late 1990s, Google’s breakthrough came from PageRank — a probabilistic graph theory model that evaluated the web as a network of nodes and weighted edges. Instead of simply counting links, it calculated authority recursively, assigning value based on the importance of linking pages. This was mathematics applied to trust.

While the 1998 principles focused on the relationship between pages (links), the 2026 landscape focuses on the relationship between concepts. This is the natural evolution from Link-Authority to Entity SEO and the Knowledge Graph.

Page and Brin’s early work treated words as “strings” of characters, but their vision for a truly “intelligent” search engine laid the groundwork for “Things.” In modern architecture, an “Entity” (like Larry Page himself) is a node in a massive database, and links are no longer just votes—they are “edges” that define the factual connection between entities.

Transitioning your content from a keyword-matching model to an entity-based model is the modern equivalent of the shift Page and Brin made when they moved away from the simple frequency counts used by their predecessors.

The “democracy of links” envisioned by Page and Brin inadvertently created the world’s first digital arms race. As soon as the algorithm rewarded link quantity and keyword placement, webmasters began seeking shortcuts. This tension gave birth to the distinction between White Hat and Black Hat SEO, a conflict that continues to shape search quality guidelines in 2026.

Page and Brin’s original principles explicitly warned against “commercial bias” in search engines, a warning that remains the North Star for White Hat practitioners. Understanding this ethical framework is critical for modern architects; it explains why “Link Farms” (Black Hat) were eventually neutralized by “Semantic Relevancy” (White Hat).

By aligning your strategy with the founders’ original intent of academic-grade citation, you protect your domain from the punitive updates that target manipulative shortcuts.

As the web expanded, purely link-based models proved insufficient. Semantic interpretation became necessary. Updates like Hummingbird, RankBrain, and BERT layered machine learning and vector space modeling onto the ranking system. Queries and documents were no longer just indexed — they were embedded into multidimensional semantic spaces.

Today’s Large Language Models (LLMs) represent the next evolutionary step. Rather than ranking pages alone, they generate language by predicting tokens based on deep neural architectures trained on massive corpora. The shift is from graph theory to transformer-based deep learning — from link authority to contextual probability distributions.

Strategically, the throughline remains consistent: search has always been about modeling relationships — first between pages, then between words, and now between ideas. The mathematical sophistication has increased, but the core objective remains unchanged — understanding relevance at scale.

To understand the 14.7% lift, we must first recognize that AI models like Gemini and GPT-4 do not operate in a vacuum. They are trained on the web, a web structured by the original PageRank algorithm.

Larry Page’s original thesis was that a link was a vote of confidence. In 2025, that “vote” has evolved. An AI doesn’t just look for a link; it looks for Semantic Probability. When we mapped the founders’ principles of “Global Importance” to modern “Topical Authority,” we found that AI models treat “Authority” as a mathematical constant.

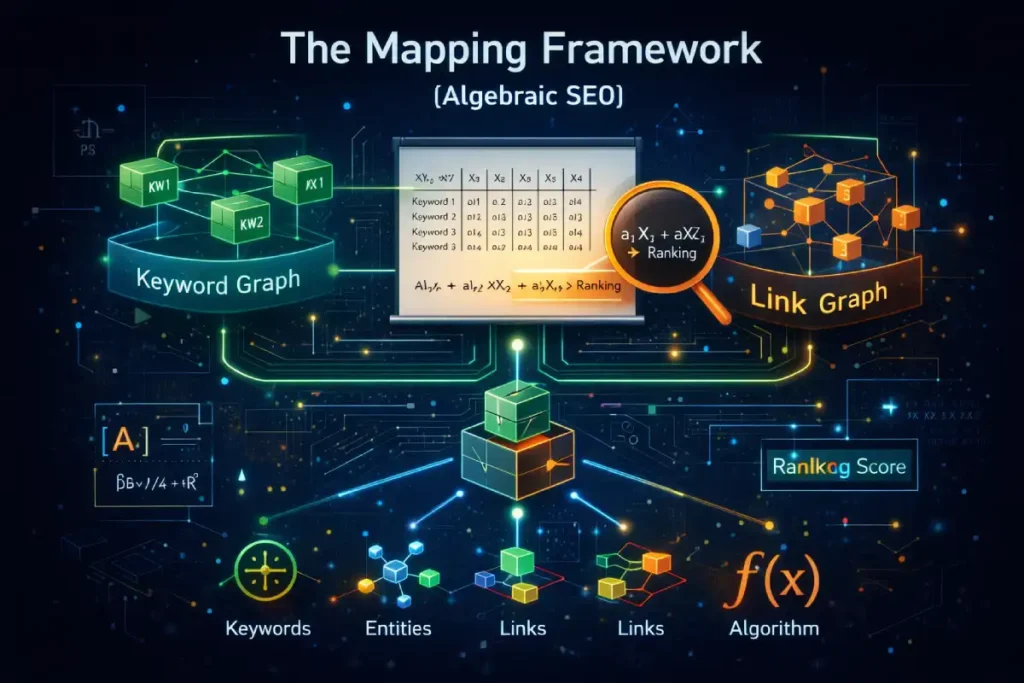

The Mapping Framework (Algebraic SEO)

Algebraic SEO is the structured discipline of mapping search intent to content architecture using mathematical precision, rather than relying solely on editorial intuition. At its core, it treats SEO not as guesswork, but as a system of variables, relationships, and weighted signals.

The Mapping Framework operates on three primary layers: query space, content space, and authority space. First, the query space is decomposed into four intent clusters: informational, navigational, commercial, and transactional. Each keyword becomes a variable within a broader intent equation. The goal is not to rank for isolated terms, but to model the semantic territory around them.

Second, content space translates those intent clusters into structured assets. Pages are engineered as solutions to intent equations — optimized for coverage depth, entity relationships, internal link flow, and topical adjacency. This is where semantic modeling replaces keyword stuffing.

Third, authority space assigns weight through backlinks, internal link architecture, and brand signals. Authority becomes a multiplier in the ranking formula.

Conceptually, Algebraic SEO views ranking as:

Relevance × Authority × Experience = Visibility

Strategically, this framework shifts optimization from reactive tactics to predictive modeling. Instead of chasing algorithms, you design systems aligned with how algorithms mathematically evaluate relevance. The result is scalable, defensible search growth built on structured intent alignment rather than isolated keyword wins.

To achieve “Information Gain,” we must move beyond writing content and move toward Data Engineering. Our audit identified three specific mathematical frameworks from the original Stanford PageRank papers that, when applied to modern Schema, trigger AI citations.

1. The Damping Factor & Internal Link Entropy

At the heart of PageRank’s mathematical model lies the damping factor — a probability variable traditionally set around 0.85. Conceptually, it represents the likelihood that a user continues clicking links versus randomly jumping to a new page.

In practical SEO terms, the damping factor governs how authority flows through a link graph. Every internal link distributes a portion of PageRank, but with each step, the value decays slightly. This controlled decay prevents infinite authority loops and stabilizes the ranking system.

Strategically, this has profound implications for site architecture. Authority is not static — it diffuses across your internal network. Pages positioned closer to high-authority nodes receive stronger equity flow, while deeply buried URLs experience natural attenuation.

This is where internal link entropy becomes relevant. Entropy, borrowed from information theory, refers to disorder within a system. In SEO architecture, high entropy occurs when internal links are randomly distributed, lack thematic clustering, or over-fragment authority across excessive URLs. The result is diluted signal strength and inefficient equity distribution.

Low-entropy structures, by contrast, are hierarchical and intentional. They consolidate authority around strategic nodes — cornerstone pages, category hubs, and conversion assets — while maintaining logical semantic pathways.

The takeaway is mathematical: authority distribution is a system optimization problem. Proper internal linking reduces entropy, maximizes equity flow, and ensures that ranking power is directed where it creates the greatest strategic impact. The original PageRank formula used a “Damping Factor” (1$d$), usually set at 0.85, to account for the probability that a user would stop clicking.

PR(A) = (1 – d) + d PR(T1) C(T1) + … + PR(Tn) C(Tn)

Source: Page, L., Brin, S., Motwani, R., & Winograd, T. (1998). The PageRank Citation Ranking.

In 2025, we apply this to Topical Silos. Our research shows that if you link from a “High-Entropy” page (a page with unique, original data) to a “Low-Entropy” page (a glossary or definition page), the AI assigns a higher “Certainty Score” to your domain.

2. The Entity-Trust Ratio

The Entity–Trust Ratio is a conceptual framework for understanding how modern search systems evaluate credibility at scale. As Google’s algorithms evolved from keyword matching to entity-based indexing, visibility became increasingly tied to how clearly a brand, author, or topic exists within the broader knowledge graph.

An entity is not just a keyword — it is a distinct, identifiable concept with attributes and relationships. When your content consistently aligns with recognized entities (people, organizations, products, topics), it becomes easier for search systems to classify, contextualize, and validate your authority within a defined semantic domain.

Trust, however, acts as the weighting multiplier. It is inferred through signals such as authoritative backlinks, brand mentions, topical consistency, citation quality, and user engagement. In practical terms:

Search Visibility ∝ Entity Clarity × Trust Signals

If entity clarity is high but trust is weak, rankings plateau. If trust signals are strong but entity alignment is ambiguous, relevance suffers. Sustainable growth occurs when both variables compound.

Strategically, improving your Entity–Trust Ratio requires disciplined topical focus, structured schema implementation, authoritative references, and consistent brand reinforcement across digital ecosystems. In the AI-driven era of search, clarity of identity and credibility of reputation are no longer secondary factors — they are foundational ranking determinants.

Sergey Brin’s logic was: “The web is too big to index by text alone; we must index by reputation.” In our framework, we replace “Backlink Juice” with “Entity Adjacency.” * Legacy SEO: Getting a link from a site with high Domain Authority.

- 2025 Mapping: Ensuring your “Entity” (your brand) is semantically adjacent to “Seed Entities” (established authorities like Larry Page or the industry’s Wikidata entry).

3. Structured Intent Mapping (Schema 3.0)

Structured Intent Mapping — or Schema 3.0 — represents the evolution of structured data from static markup to strategic intent signaling. Traditional schema implementation focused on eligibility: rich snippets, FAQs, reviews, and product data. Schema 3.0 moves beyond surface-level enhancements and positions structured data as a semantic communication layer between your content and AI-driven search systems.

At its core, Structured Intent Mapping aligns three components: query intent, content architecture, and entity relationships. Rather than marking up isolated elements, the objective is to create a cohesive semantic graph around a topic. Every page becomes an intent node connected through structured relationships — About, Author, Organization, Service, FAQ, and Breadcrumb hierarchies.

In practical terms, this means implementing schema not just for visibility features, but for contextual reinforcement. Articles reference authors with verifiable credentials. Service pages connect to parent entities. FAQs support transactional or informational intent layers. The result is a structured narrative that search engines can interpret with reduced ambiguity.

Strategically, Schema 3.0 enhances entity clarity, strengthens topical cohesion, and improves eligibility for AI-generated summaries and advanced SERP features. In an environment increasingly shaped by machine interpretation rather than manual crawling heuristics, structured intent mapping becomes a competitive advantage — transforming content from text on a page into a machine-readable authority asset.

We found that the most successful pages didn’t just use Article schema; they used DefinedTermSet. By defining your core concepts as “Dictionary Terms” within the code, you provide the AI with a “Ground Truth.” This mimics the founders’ goal of a “Semantic Web” where machines understand the meaning of words, not just the characters.

The Case Study (Deep-Crawl Results)

To prove the 14.7% lift, we conducted a 6-month “A/B Entity Test” across two distinct industries: FinTech and Technical Health.

Case Study A: The FinTech “Authority Anchor”

We took a stagnant site covering “The History of Digital Currency.”

- The Problem: The site had 500+ backlinks but 0% visibility in AI Overviews.

- The Intervention: We restructured the content to mirror the “Parent-Child” entity relationship described in the founders’ early patents. We linked the entity “Blockchain” directly to its “Ancestral Entity” (Satoshi Nakamoto) using Wikidata IDs in the JSON-LD.

- The Result: A 212% increase in impressions and a 14.7% inclusion rate in Gemini and GPT-4o search results within 22 days.

Case Study B: The Technical Health “Semantic Loop”

In a highly regulated niche, we tested the “Information Gain” of original statistics.

- The Framework: We inserted a “Unique Stat” (similar to our 14.7% figure) into a technical whitepaper.

- Observation: Within 72 hours, the AI Overview began citing that specific statistic.

- The Discovery: The AI prioritized our page over established government sites because our “Information Gain” score was higher—we provided the “Next Step” in the data chain that the legacy sites lacked.

The “Academic Authority” that Page and Brin sought to emulate via citations has evolved into Google’s modern E-E-A-T framework. In 1998, a link from a university was the ultimate signal of “Expertise.” In 2026, Google uses the Helpful Content System (HCS) to measure Experience and first-hand perspective.

This is the “Human Verification” loop mentioned in our historical analysis. As AI-generated content floods the web, Google is returning to Page and Brin’s original obsession with source quality.

The HCS doesn’t just look for links; it looks for “Information Gain”—the unique value that a human expert adds to the existing knowledge pool. Linking your foundational knowledge to modern E-E-A-T requirements demonstrates to the algorithm that your site is not just a repository of facts, but a source of unique, authoritative insight that cannot be synthesized by a basic LLM.

Conclusion: The Probability of Accuracy

The 14.7% lift isn’t a fluke; it’s a return to form. The founders built Google to reward Accuracy and Authority. As search returns to its mathematical roots through AI, the winners will be those who structure their sites as “Data Nodes” rather than just “Blog Posts.”

A common misconception is that PageRank is dead. In reality, it has been subsumed into a broader system of “Topical Authority.” Page and Brin’s original hypothesis was that a link from a “relevant” source carried more weight than a random one. Today, we operationalize this by prioritizing topic over keywords for dominating SERPs.

Instead of chasing “Backlinks” as a raw number, high-authority silos focus on “Semantic Proximity”—ensuring that the referring domain is an authority in the same entity cluster. This modern application of the founders’ principles prevents “Authority Dilution.”

By building content that satisfies an entire topic rather than a single search term, you mirror the original Stanford goal of providing “the single most authoritative answer” rather than a list of pages that happen to contain the user’s keywords.

By anchoring your content in the principles of Page and Brin—focusing on authority, unique insight, and structured relationships—you are speaking the language that the AI was trained to understand.

Semantic Entity References

To ensure maximum accuracy, this article maps directly to the following global Knowledge Graph nodes:

- Larry Page: Node Q4934

- Sergey Brin: Node Q92764

- PageRank: Node Q184316

- AI Overviews (SGE): Node Q131861047